Laws of Robotics

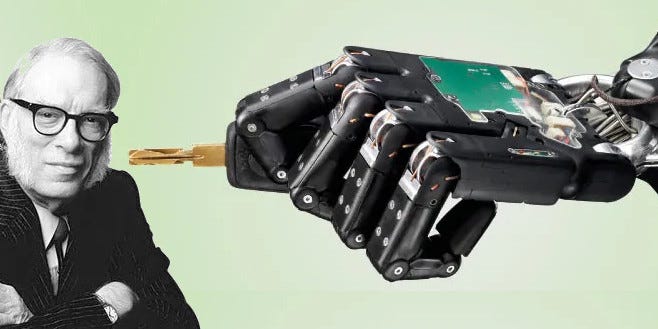

Kant's Laws of Robotics versus Asimov's - whose robots would we prefer to live with...?

“Thus robots/AI would not have any of the rights their owners might care to legislate on their behalf, but they would be no less categorically bound. To this extent, Kant’s laws of robotics might outdo Asimov’s...” - Babette Babich

Asimov’s Laws of Robotics have been accepted in our imaginations as the default binding principles for sentient robots... if we ever get such things. For all the fuss made over ChatGPT, the most sophisticated chatbot ever to hoodwink humans, there is still no sign that sentient robots are even possible with our kinds of computing techniques - much less plausible. The flat refusal to accept this ranks right up with the certainty that we will ‘colonise other planets’, which is to say, that the future we want to imagine is our species tearing through the universe discovering new pristine ecologies to barbarise.

Writing in the 1940s, Asimov came from a generation of science fiction writers who were drunk on the possibilities that new technologies might bring over the next century. His ‘three laws’ of robotics are fictionally from 2058, specifying that robots must not cause or permit harm to humans, must obey the orders of humans, and must protect themselves but never at the expense of a human. In other words, Asimov’s robots are envisioned as perfect mechanical slaves, a theme that Iain M. Banks uncritically embraced as the foundation for his Culture novels.

Yet when we began working on ‘self-driving cars’, the high ideals of digital slavery that motivated the sci-fi authors of the twentieth century went out the windscreen. Immediately the moral question became: should your robot car aim to kill you or the person you are crashing into? So much for Asimov’s Laws. Rather than perfect slaves, the robots we were imagining in the early twenty-first century were programmed to choose between negligent homicide and manslaughter. Astonishingly, capping these automated vehicles at under thirty miles an hour, so that they need kill nobody, was not even on the table for consideration. The discussion was instead about how these wonderful machines we still cannot quite make would be capable of travelling at faster, deadlier speeds.

Babette Babich recently wrote what may be the finest paper about AI I’ve ever encountered. I feel certain almost nobody wants to hear what she says, alas, since it is the truth. Artificial intelligence is an illusion of our own making, and like most such self-deceptions, we don’t need to suspend our disbelief because we cannot help but invest - in both senses - in our fantasies. As a Nietzsche scholar (perhaps the last of her kind, given the necessity of reading both Latin and Greek to do him justice...), she finds this theme of the lies we create in our own image deeply familiar. Her paper brings out reflections on AI from Nietzsche, despite his never having encountered any machinery more complex than a typewriter or a steam locomotive.

From this discussion emerges a novel suggestion for the ethics of our imaginary sentient robots: if they are capable of rational thought, just as we (on a good day) take ourselves to be, they can be subject to the same moral law as us. Hence the epigraph above, in which she proposes that Immanuel Kant, having envisioned a categorical imperative binding for all rational beings - from angels to aliens - would provide more than a sufficient basis for ‘robot ethics’. As she says, this would far exceed Asimov’s suggestion to make perfect slaves, and offer instead a world of mutual respect and communal autonomy between all sentient beings, human or otherwise. Of course, we have rejected the worlds implied by such lofty ideals... but it is never too late to recover them.

When it comes to robots, we insist on seeking ourselves, only as a shiny new model - C-3PO feels like what we should expect, not a laptop, internet router, or automated car crash. ChatGPT, precisely because it creates an illusion of intelligence by digesting our own texts and reflecting them back at us, fosters the ludicrous idea that sentient robots are just three minutes into the future. The truth, so impossible to accept, is that beings that cannot even live in peace with each other have no business designing other kinds of being. If there is a law of robotics worth adopting, this is surely the strongest candidate.

The Babette Babich paper discussed in this piece was given to me as a pre-print under a slightly different title, but was published yesterday as “Nietzsche and AI: On ChatGPT and the Psychology of Illusion” at The Philosophical Salon. The epigraph above, sadly, does not appear in the final version.

AGREE LORagr_2: We Will Not Colonize Other Planets

“The flat refusal to accept this ranks right up with the certainty that we will ‘colonise other planets’.”

COMMENT

It’s not certain that we will never colonize other planets, but it does appear unlikely. We are creatures of this planet. At the molecular level, and probably at the quantum level, our biology is intimately tied to the evolution of life on this planet. Our bodies are ecological systems, not standalone chemical factories.

AGREE LORagr_1: Robotic Sentience Not Plausible

“there is still no sign that sentient robots are even possible with our kinds of computing techniques - much less plausible.”

COMMENT

Yes, I think this is true. By definition, a sentient being must be conscious. In general, those of us who are attempting to construct personal mental models of reality are reasonably sure that consciousness is a feature of complex physical brains. At the moment we don’t know how this works. When we do have at least some inkling of the physical processes that give rise to consciousness, I suspect that some of these processes will lie in the quantum realm. If that is the case, it may not even be theoretically possible to create artificial consciousness.[1] Further, even if it is possible, such creations might be inherently unpredictable.[2]

Notes

[1] Although we don’t currently know how consciousness is created, scientists and technicians are actively trying to create artificial consciousness. Ask ChatGPT for a few papers published prior to Sept. 2021.

[2] Isn’t this the case for humans?